From Vibes to Visibility: Why We Invested in Traceloop

Shining a light on the dark corners of AI agents

Modern software already stretches engineering teams thin with multi-cloud deployments, microservices, serverless compute, and real-time data streams, and observability tooling has struggled to keep pace. The rise of AI agents widens that gap even further. Prompt chains, vector database lookups, and autonomous actions introduce novel failure modes, such as hallucinations, drift, and runaway spend, that classic APM and observability tools were never designed to handle. And when an AI agent misfires, users don’t file a bug report; they disengage.

Separately, OpenTelemetry (OTEL) has emerged as the lingua franca for pulling modern observability signals together. In fact, roughly 61% of engineering leaders now call OTEL critical to their observability strategy.

It would be incredibly powerful to combine observability for AI workloads with OTEL. Enter Grand’s latest investment: Traceloop.

Traceloop’s $6.1M Seed Round

We’re thrilled to announce our participation in Traceloop’s $6.1M seed round, led by Sorenson Capital and Ibex Investors, with participation from Y Combinator, Samsung NEXT, and an absolute rockstar roster of angel investors, including the CEOs of Datadog, Elastic, and Sentry.

This capital will accelerate Traceloop’s enterprise platform, deepen their AI evaluation stack, and scale the open-source momentum behind OpenLLMetry, their fast-growing OSS framework built on OpenTelemetry.

Traceloop joins Grand’s growing portfolio of Commercial Open Source Software (COSS) companies, including Astronomer, Payload, Tembo, and Comp AI, and fits squarely into our COSS investment thesis.

Why We Invested

Strong Founders with Real AI Battle Scars

We’ve been following Traceloop since their pre-seed in 2023 and built conviction in both the market need and the team. Founders Nir Gazit and Gal Kleinman bring an ideal blend of AI and enterprise infrastructure DNA. At Google, Nir built predictive models powering YouTube, Maps, and Photos. At Fiverr, Gal led ML platforms powering real-time search and discovery.

The idea for Traceloop emerged from Nir and Gal’s own frustration: LLMs were powerful but flaky. They’d see regressions without any code changes. Testing was manual, often done in spreadsheets. No tools existed to engineer prompt-based systems the way their teams had always engineered traditional software. So they built one.

AI Observability is a Greenfield Category

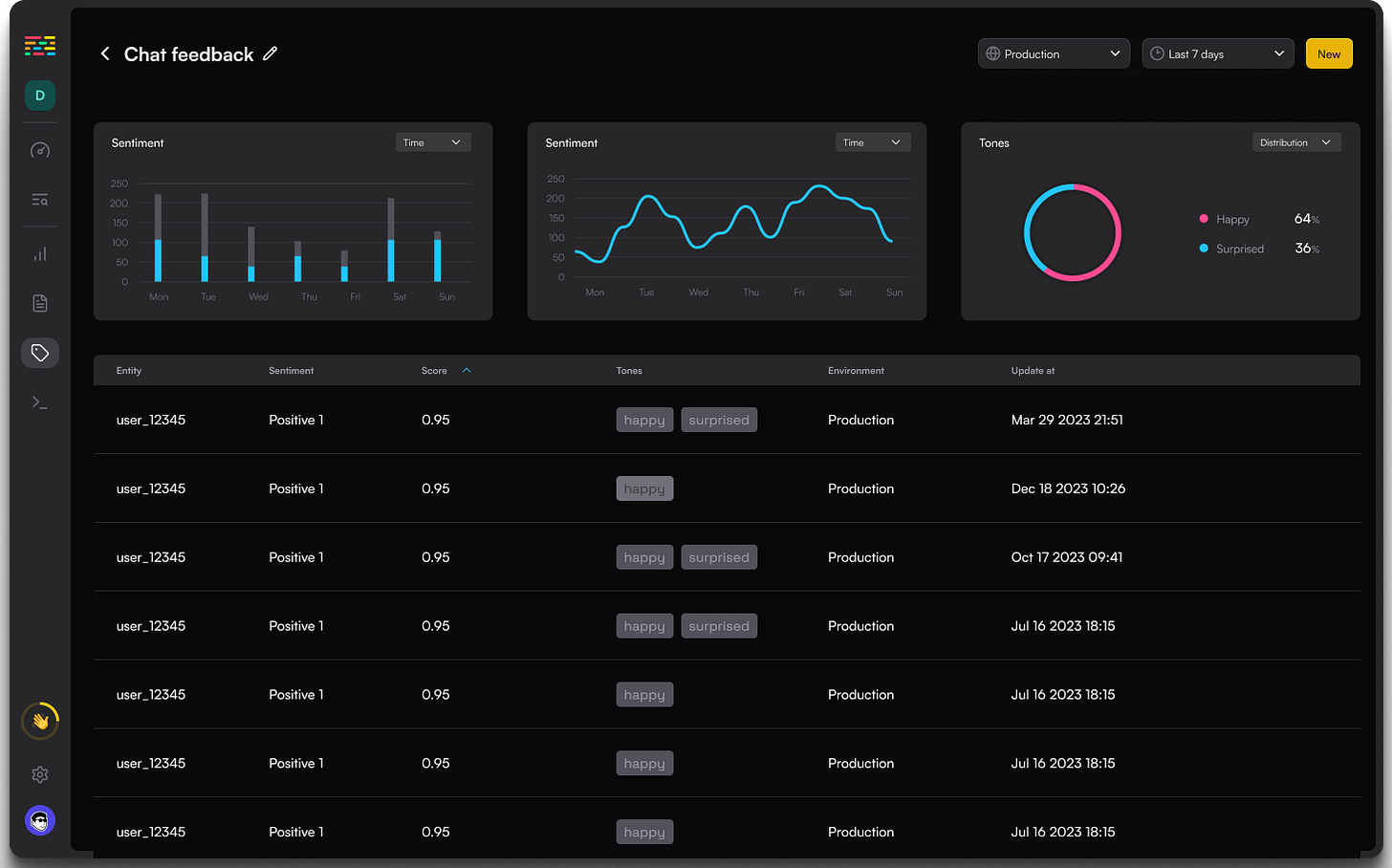

If CI/CD brought rigor to software engineering, Traceloop brings the same rigor to LLM applications. Their platform traces every token, auto-scores output against your rules, and flags regressions before users experience issues.

The result? Teams can version prompts like code, switch model providers without surprises, detect hallucinations, and ship with confidence—not vibes.

Open Source Flywheel

Traceloop’s OSS traction speaks for itself:

- 500K+ monthly downloads of Traceloop’s open source SDK, OpenLLMetry

- 50K+ weekly installs

- 5,600+ GitHub stars

- 60+ active contributors

To date, most of Traceloop’s paying users started with the open-source OpenLLMetry SDK and then graduated to Traceloop’s commercial platform.

Enterprise Adoption + Product Velocity

Traceloop doesn’t just collect telemetry; it turns that telemetry into actionable insights. The platform shines when teams want to test-drive different foundation models. Imagine rerouting calls from OpenAI’s o3 to Anthropic’s Claude Sonnet 4: the API swap is trivial, but every prompt needs a tune-up and the responses will shift. Traceloop’s new console gives engineers the metrics and dashboards they need to see how the system behaves once that change goes live. The results show up in Traceloop’s rapid adoption by software vendors and enterprise customers:

- Cisco, Dynatrace, and IBM use Traceloop for LLM observability and real-world model validation (e.g., on platforms like Amazon Bedrock and watsonx.ai)

- Customers like Miro use Traceloop to experiment with models like GPT-4.1 without impacting users, and to flag edge cases early

This validation, from open-source adoption to Fortune 500 use, made us confident that Traceloop can not only define but also lead the AI observability category.

Built on Standards That Win

Traceloop’s OpenLLMetry SDK lets engineering and operations teams remain vendor-neutral, because it integrates with existing observability backends like Grafana, Datadog, Honeycomb, and any other backend that supports OTEL. This aligns with Grand’s conviction that the future of AI infrastructure will be open, composable, transparent, and developer-first.

OpenLLMetry supports more than 26 LLM providers (OpenAI, Anthropic, Mistral, etc.), vector DBs (Pinecone, Weaviate, LanceDB), and orchestration frameworks like LangChain, CrewAI, and Haystack. It’s even evolving to support emerging protocols like Anthropic’s Model Context Protocol (MCP) and Google’s Agent2Agent (A2A).

What’s Next

With this round, Traceloop is doubling down on agentic flows, multimodal model support, and enterprise security readiness. Their platform is now generally available—and if you’re building with LLMs, you can start tracing today.

If you believe observability is just as important in AI as it is in traditional software, this is your moment.

Onward,

Nathan Owen

Partner, Grand Ventures

PS: For founders, deciphering the commercial viability of open-source projects presents a formidable challenge. If you are a founder and think your OSS project has what it takes to become a venture-scale startup, drop me a note at nathan@grandvcp.com and let’s chat. We’ve walked this path with a bunch of companies that have successfully made the journey.

If you geek out on Observability, my four-part series starts here: The Evolution of Observability – From Monitoring to Intelligence